About This Project

PKM-KC (Program Kreativitas Mahasiswa Karsa Cipta, the Student Creativity Program for creative works) gives students a way to realize constructive ideas grounded in initiative (karsa) and reasoning, even when those ideas do not yet deliver complete functionality or direct benefits to others. For the PKM-KC 2023 program, we developed Sound-Degla, a pair of smart glasses.

Sound-Degla is a pair of smart glasses that uses artificial intelligence to detect sign language and translate it. It is designed to improve communication for deaf people by detecting Bisindo (Bahasa Isyarat Indonesia, Indonesian Sign Language).

By combining a Convolutional Neural Network (CNN) with a Raspberry Pi 4, the product achieves a satisfactory level of accuracy, offering users an effective alternative means of communication. In practice, the glasses not only increase the independence of deaf users but also contribute to the broader development of assistive technology. There is ample room for further development, including improving the sign language model and extending the functionality of the Sound-Degla smart glasses.

Our Team

Our team consists of five Informatics students:

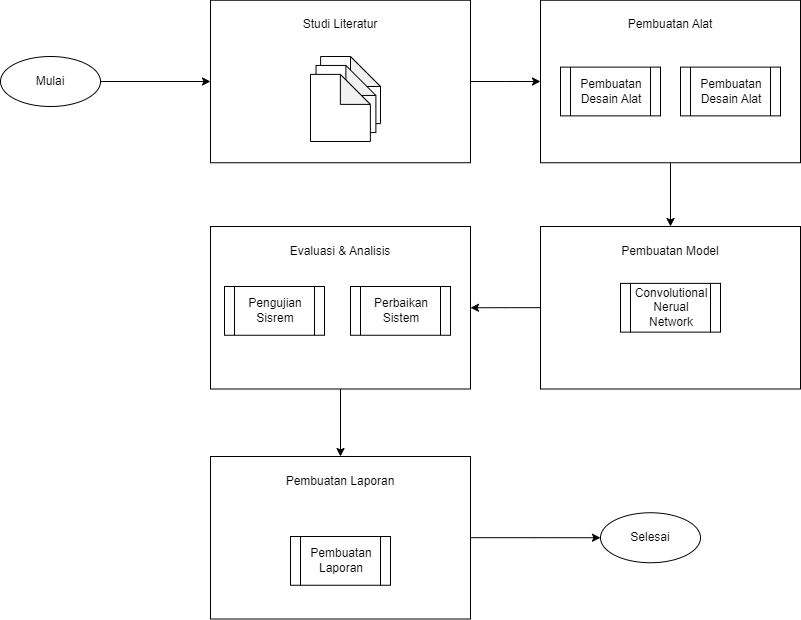

Implementation Stages

The implementation stages of this project are shown below.

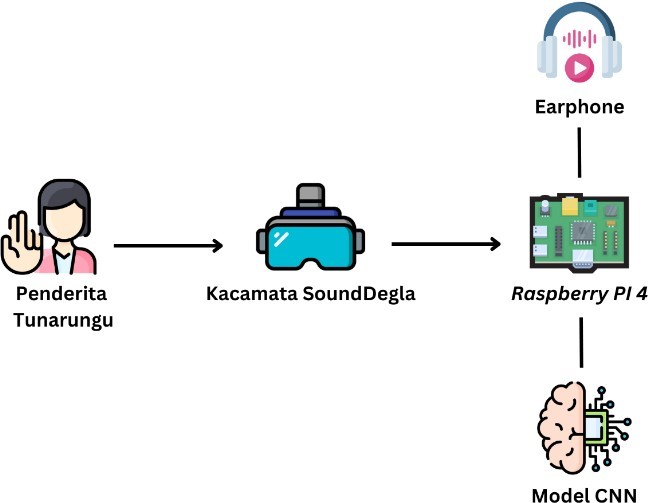

Tool Workflows

This section describes the workflow used to build the system. The glasses carry a mini camera that captures the hand movements (sign language) of a deaf user. This input is sent to the Raspberry Pi 4, where the prepared program processes it using a Convolutional Neural Network (CNN) model. The processed results are then passed to the API, and the resulting voice translation of the sign is played through earphones integrated into the Sound-Degla glasses. The system workflow can be seen in the following figure.
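The camera-to-earphone flow can be sketched as a simple loop. This is a minimal, hedged illustration rather than the project's actual program: `classify` is a hypothetical stand-in for the CNN running on the Raspberry Pi 4 (assumed to return one score per label), `speak` is a hypothetical stand-in for the API call and earphone playback, and the `min_confidence` threshold is an assumed design choice for skipping uncertain frames.

```python
from typing import Callable, Iterable, List, Sequence


def argmax(values: Sequence[float]) -> int:
    """Return the index of the largest value (the first one on ties)."""
    return max(range(len(values)), key=lambda i: values[i])


def run_pipeline(
    frames: Iterable[object],
    classify: Callable[[object], Sequence[float]],
    speak: Callable[[str], None],
    labels: Sequence[str],
    min_confidence: float = 0.8,  # assumed threshold, not from the project
) -> List[str]:
    """Camera frames -> CNN scores -> sign label -> voice output.

    classify and speak are hypothetical placeholders for the CNN model
    and the API/earphone output, respectively.
    """
    spoken: List[str] = []
    for frame in frames:
        scores = classify(frame)  # one score per entry in labels
        best = argmax(scores)
        # Skip frames where the model is not confident enough.
        if scores[best] < min_confidence:
            continue
        word = labels[best]
        speak(word)  # e.g. send to a text-to-speech service, then play
        spoken.append(word)
    return spoken


if __name__ == "__main__":
    # Hypothetical labels and fake CNN outputs, only to show the flow.
    labels = ["A", "B", "C"]
    fake_scores = {
        "frame1": [0.9, 0.05, 0.05],
        "frame2": [0.4, 0.3, 0.3],
        "frame3": [0.1, 0.1, 0.8],
    }
    run_pipeline(["frame1", "frame2", "frame3"], fake_scores.get, print, labels)
```

Keeping the model and the output step as injected functions lets the same loop be tested on a laptop with fake scores before it is wired to the real camera, CNN, and API on the Raspberry Pi.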

Product Outcome

The product visualization of the Sound-Degla glasses shows their physical design and functionality directly. Structure diagrams, interface mockups, and depictions of daily use help readers understand how the glasses are used, while images of visual indicators, color variations, and the aesthetic design make the product explanation clearer. The product results of this project are shown below.

Conclusion

The results of this project show that the Sound-Degla smart glasses offer an innovative solution for improving the communication of deaf people through Bisindo sign language detection. Applying a Convolutional Neural Network (CNN) as the detection model and integrating it with a Raspberry Pi 4 as the processing platform contributed significantly to achieving the research objectives.

The glasses' ability to detect sign language with a satisfactory level of accuracy shows strong potential for providing a more effective means of communication for deaf people. In practical terms, this research can improve communication for deaf people, enabling them to be more independent and to participate actively in a variety of situations. The project's contributions to assistive technology, particularly the use of sign language models and the integration of hardware such as the Raspberry Pi and cameras, can be applied more widely.

There is also potential for further development, both in improving the sign language model and in expanding the functionality of the Sound-Degla smart glasses. This research therefore opens the door to further innovations that support the lives of deaf people and broaden the scope of assistive technology. Overall, the Sound-Degla smart glasses promise a positive impact and a meaningful contribution to advancing technological solutions for the deaf community.

For further development of sign language recognition with CNN models, we offer the following suggestions. The dataset should be larger and more varied so that the CNN model can classify signs reliably. When applying a CNN model, its parameters must suit the available data, and experimenting with different hyperparameters is advisable. Repeated testing is recommended to find suitable settings.
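The suggestion to experiment with hyperparameters and repeat testing can be organized as a grid search with repeated runs per setting. The sketch below is a hedged illustration, not the procedure used in this project: `train_and_evaluate` is a hypothetical function assumed to train the CNN with the given hyperparameters and random seed and return validation accuracy, and the hyperparameter names and the `fake_train_and_evaluate` stand-in are invented purely for demonstration.

```python
import itertools
import random
import statistics
from typing import Callable, Dict, List, Sequence, Tuple

Params = Dict[str, object]


def grid_search(
    grid: Dict[str, Sequence[object]],
    train_and_evaluate: Callable[[Params, int], float],
    repeats: int = 3,
) -> Tuple[Params, float, List[Tuple[Params, float, float]]]:
    """Try every hyperparameter combination, repeat each run with a
    different seed, and rank combinations by mean accuracy."""
    names = list(grid)
    results: List[Tuple[Params, float, float]] = []
    for values in itertools.product(*(grid[n] for n in names)):
        params = dict(zip(names, values))
        # Repeated testing: same settings, different seeds.
        scores = [train_and_evaluate(params, seed) for seed in range(repeats)]
        spread = statistics.stdev(scores) if repeats > 1 else 0.0
        results.append((params, statistics.mean(scores), spread))
    best_params, best_mean, _ = max(results, key=lambda r: r[1])
    return best_params, best_mean, results


def fake_train_and_evaluate(params: Params, seed: int) -> float:
    """Hypothetical stand-in for real CNN training, for demonstration only."""
    rng = random.Random(seed)
    base = 0.9 if params["learning_rate"] == 1e-3 else 0.7
    base += 0.02 if params["batch_size"] == 32 else 0.0
    return base + rng.uniform(-0.01, 0.01)  # seed-dependent noise


if __name__ == "__main__":
    grid = {"learning_rate": [1e-3, 1e-2], "batch_size": [32, 64]}
    best, mean_acc, all_results = grid_search(grid, fake_train_and_evaluate)
    for params, mean, spread in all_results:
        print(params, round(mean, 3), round(spread, 3))
    print("best:", best, round(mean_acc, 3))
```

Reporting the spread alongside the mean makes it easier to tell whether one setting is genuinely better or only got a lucky run; with a larger dataset, the same loop can be reused unchanged.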